#Vertex AI pipelines


Vertex AI: Transforming AI Development with Efficiency and Scalability

Vertex AI is an innovative Google solution that is transforming the way organizations develop and deploy machine learning (ML) and artificial intelligence (AI) models. With an integrated platform and advanced tools, Vertex AI offers features that help companies optimize their operations efficiently and at scale. In this article, we will explore how Vertex AI works,…

#Google Vertex AI#Vertex AI#Vertex AI API#Vertex AI AutoML#Vertex AI how to use#Vertex AI settings#Vertex AI dashboard#Vertex AI deploy#Vertex AI and BigQuery#Vertex AI and TensorFlow#Vertex AI examples#Vertex AI explained#Vertex AI Google Cloud#Vertex AI integration#Vertex AI artificial intelligence#Vertex AI introduction#Vertex AI machine learning#Vertex AI ML Ops#Vertex AI models#Vertex AI pre-trained models#Vertex AI no-code#Vertex AI for beginners#Vertex AI pipelines#Vertex AI prediction#Vertex AI Python#Vertex AI features#Vertex AI training#Vertex AI tutorial#Vertex AI complete tutorial#Vertex AI advantages


BigQuery Studio From Google Cloud Accelerates AI operations

Google Cloud is well positioned to provide enterprises with a unified, intelligent, open, and secure data and AI cloud. Thousands of customers in many industries across the globe use Dataproc, Dataflow, BigQuery, BigLake, and Vertex AI for data-to-AI operations. Google Cloud now presents BigQuery Studio, a unified, collaborative workspace for its data analytics suite that speeds up data-to-AI workflows, from data ingestion and preparation through analysis, exploration, and visualization to ML training and inference. It enables data professionals to:

Use BigQuery's built-in SQL, Python, Spark, or natural-language capabilities, and leverage code assets across Vertex AI and other products for specific workflows.

Improve collaboration by applying software development best practices, such as CI/CD, version history, and source control, to data assets.

Enforce security standards consistently and obtain governance insights within BigQuery through data lineage, profiling, and quality checks.

The following features of BigQuery Studio assist you in finding, examining, and drawing conclusions from data in BigQuery:

A powerful SQL editor with code completion, query validation, and estimation of bytes processed.

Embedded Python notebooks built on Colab Enterprise. Notebooks come with built-in support for BigQuery DataFrames and one-click Python development runtimes.

A PySpark editor for creating stored Python procedures for Apache Spark.

Dataform-based asset management and version history for code assets, including notebooks and stored queries.

Assistive code creation in notebooks and the SQL editor, based on Gemini generative AI (Preview).

Dataplex features for data profiling, data quality checks, and data discovery.

The option to view work history by project or by user.

The ability to export saved query results for use in other programs, and to analyze them by connecting to other tools such as Looker and Google Sheets.

To get started with BigQuery Studio, follow the guidelines under Enable BigQuery Studio for Asset Management. This process enables the following APIs:

The Compute Engine API, which is required to use Python functions in your project.

The Dataform API, which is required to store code assets such as notebook files.

The Vertex AI API, which is required to run Colab Enterprise Python notebooks in BigQuery.

Single interface for all data teams

Because of disparate technologies, analytics experts must use various connectors for data ingestion, switch between programming languages, and transfer data assets between systems, which results in inconsistent experiences. This greatly affects the time-to-value of an organization's data and AI initiatives.

BigQuery Studio tackles these issues by providing an end-to-end analytics experience on a single, purpose-built platform. Its integrated workspace consists of a SQL interface and a notebook interface (powered by Colab Enterprise, which is currently in preview), so data engineers, data analysts, and data scientists can complete end-to-end tasks such as data ingestion, pipeline creation, and predictive analytics in the programming language of their choice.

For instance, data scientists and other analytics users can now analyze and explore petabyte-scale data using Python within BigQuery, in the familiar Colab notebook environment. The BigQuery Studio notebook environment supports data querying and transformation, autocompletion of datasets and columns, and browsing of datasets and schemas. Additionally, Vertex AI offers access to the same Colab Enterprise notebooks for machine learning operations, including MLOps, deployment, and model training and customization.

Additionally, through BigLake, which has built-in support for Apache Parquet, Delta Lake, and Apache Iceberg, BigQuery Studio offers a single pane of glass for working with structured, semi-structured, and unstructured data of all types across cloud environments such as Google Cloud, AWS, and Azure.

Shopify, one of the top commerce platforms, has been investigating how BigQuery Studio could enhance its existing BigQuery environment.

Maximize productivity and collaboration

BigQuery Studio improves collaboration among data practitioners by extending software development best practices such as CI/CD, version history, and source control to analytics assets like SQL scripts, Python scripts, notebooks, and SQL pipelines. Users will also be able to connect securely to their preferred external code repositories, so that their code is always up to date.

In addition to facilitating human collaboration, BigQuery Studio offers an AI-powered collaborator for coding help and contextual discussion. Duet AI in BigQuery can automatically recommend functions and code blocks for Python and SQL based on each user's context and their data. The new chat interface lets data practitioners receive specialized, real-time help on specific tasks in natural language, eliminating the need for trial and error and for searching documentation.

Unified security and governance

BigQuery Studio enables enterprises to extract trusted insights from trusted data by helping users understand data, recognize quality issues, and diagnose problems. To help ensure that data is accurate, dependable, and of high quality, data practitioners can profile data, manage data lineage, and implement data-quality constraints. Later this year, BigQuery Studio will surface tailored metadata insights, such as dataset summaries or suggestions for further investigation.

Additionally, by eliminating the need to copy, move, or share data outside BigQuery for sophisticated workflows, BigQuery Studio enables administrators to enforce security standards consistently across data assets. Fine-grained security policies are enforced with unified credential management across BigQuery and Vertex AI, eliminating the need to manage extra external connections or service accounts. For instance, data analysts can now use Vertex AI's core models for image, video, text, and language translation for tasks such as sentiment analysis and entity detection over BigQuery data, using straightforward SQL in BigQuery, without sharing data with outside services.

Read more on Govindhtech.com

#BigQueryStudio#BigLake#AIcloud#VertexAI#BigQueryDataFrames#generativeAI#ApacheSpark#MLOps#news#technews#technology#technologynews#technologytrends#govindhtech


#art#machinelearning#deeplearning#artificialintelligence#datascience#iiot#data#MLsoGood#code#python#bigdata#MLart#algorithm#programmer#pytorch#DataScientist#Analytics#AI#VR#iot#TechCult#Digitalart#DigitalArtMarket#ArtMarket#DataArt#ArtTech#GAN#GANart#arttech#aiart


Google Cloud Platform Development: Create Your Business!

End-to-End GCP app development

We have a wealth of experience developing high-end, user-friendly, and scalable software solutions powered by Google Compute Engine. We provide a complete and reliable app development experience, from infrastructure through deployment and administration.

Infrastructure

We create continuous integration (CI) and continuous delivery (CD) systems by integrating a set of Google Cloud tools, and then deploy those systems to Google Kubernetes Engine (GKE).

Design

Google Cloud services are extremely reliable and effective. We use industry-specific design patterns to develop effective solutions, and we offer RESTful APIs with a consistent design.

Deployment

We use Google Cloud Platform tools to create modern, automated deployment pipelines. We also bring ASP.NET applications in Windows containers to Google Cloud through GKE.

Compute Engine Consultancy and Setup

We help companies plan and implement their solution strategy. We set up virtual machines by selecting and deploying the most efficient Compute Engine resources.

Deployment Strategy

Compute Engine offers companies a variety of deployment options. We provide consulting services to help businesses choose the most effective strategy.

Assessment

We analyze your most important requirements and weigh the advantages and disadvantages of each deployment option before recommending the one best suited to your company.

Set-up

We understand the benefits of the various Compute Engine deployment options, and we set up virtual machines accordingly.

Data & Analytics

We help enterprises plan and implement big data processes using GCP. We start by creating comprehensive lists of the most important requirements, database dependencies, user groups, and the data that needs to be transferred. We then develop a complete pipeline for data analysis and machine learning.

Data Ingestion

We track data ingestion from various datasets, the first stage of the data analytics and machine learning life cycle.

Processing data

We process the raw data using BigQuery and Dataproc, then examine it in the Cloud console.

Implement & Deploy

We use Vertex AI Workbench user-managed notebooks to carry out feature engineering. The team then implements a machine learning algorithm.

GCP Migration

We help enterprises migrate and upgrade their mobile application backends using Google App Engine. We analyze, review, and choose the most effective tools for your needs.

Architectures

We help you transition to a new infrastructure with minimal impact on current operations. We transfer data safely and with quality control, and make sure that existing processes, tools, and updates can be reused.

Cloud Storage environments

We'll prepare your cloud storage system and help you select the best tool for your needs.

Pipelines

Pipelines are used to transfer and load data from various sources. Our team chooses the best migration method among the available options.

Google's industry-specific solutions can power your apps.

Industries supported by app development companies:

Retail

Our retail app developers are dedicated to helping retailers get the most out of their apps by using the most cutting-edge technology and tools on the market.

Travel

We have an impressive track record in developing mobile travel apps that allow hospitality businesses to give their customers the best experience in the industry.

Fintech

We are changing traditional financial and banking infrastructure. Our fintech applications use the latest technologies that are currently ruling FinTech.

Healthcare

We are known for our HIPAA-compliant, consumer-facing healthcare services and for innovative digital ventures with companies that aim to transform healthcare digitally.

SaaS

Our SaaS product development service includes an extensive SaaS strategy. Using functional and architectural building blocks is crucial to supporting SaaS platform development.

Fitness & Sports

Since 2005, we've developed UX-driven, premium fitness and sports software. Our team develops new solutions to improve fitness through an agile, user-focused method. Gain a competitive edge by harnessing the value of your business.

FAQs

1. What exactly is cloud computing?

Cloud computing is the term used to describe computing power and services, including networking, storage, computing, databases, and software, delivered over the Internet. It is also referred to as "the cloud". Cloud computing services are accessible all over the world and have no geographical restrictions.

2. What exactly is Google Cloud?

Google Cloud Platform (GCP) is a cloud platform developed by Google. It gives access to Google's cloud-based systems and computing services, and offers a variety of services across the domains of cloud computing, including compute, storage, networking, and migration.

3. What security features can cloud computing provide?

Cloud computing comes with a number of vital security features, such as:

Access control: This gives users control over access; they can grant access to others joining the cloud ecosystem.

Identity management: It allows you to authorize different services using your identity.

Authorization and authentication: This is a security function that permits only authenticated and authorized users to gain access to data and applications.

4. Which other cloud computing platforms are there to develop enterprise-level applications?

Amazon Web Services, Azure, and a variety of other cloud computing platforms are available for enterprise-level development. To determine which cloud platform is suitable for your project, speak with us.

Create and inspire with Google Cloud solutions.

Read more on Google Cloud Platform Development – https://markovate.com/google-cloud-platform/


Digitec Galaxus chooses Google Recommendations AI


The architecture covers three phases of generating context-driven ML predictions, including:

ML Development: Designing and building the ML models and pipeline. Vertex Notebooks are used as data science environments for experimentation and prototyping. Notebooks are also used to implement model training, scoring components, and pipelines. The source code is version controlled in GitHub. A…


NICE unveils five year plan promising faster access to medicines

NICE has included proposals to speed up evaluations and focus on new technology such as digital health in a new strategy to provide faster access to new medical treatments and innovations.

The cost-effectiveness body has produced a new vision for the next five years, after reflecting on lessons learned during the COVID-19 pandemic.

NICE said the pandemic showed the importance of swiftness and flexibility and embracing new forms of innovation in healthcare technology.

In a strategy document, NICE said its purpose will not change, but it will try to improve the speed and efficiency of its technology evaluations.

New treatments will be assessed at pace and guidelines could be changed to reflect the latest evidence instead of reviews every few years.

NICE will focus on ensuring guidance is used in NHS organisations so that patients get access to the latest therapies and “real world” data will be used to resolve gaps in knowledge and improve access to new technology.

Since it began work around 22 years ago, NICE has seen its reputation grow around the world, along with its role and remit.

But it has also faced criticism from pharma companies that say it undervalues medicines – patients in the country were denied access to the expensive cystic fibrosis drug Orkambi for four years because of a pricing row with NICE and the NHS.

While Vertex was aggressive in its pursuit of a better price for Orkambi, no-one involved emerged looking good, with despairing patient groups pointing out that children were suffering and dying because of the delay accessing the potentially life-extending drug.

NICE will seek to avoid this kind of stand-off with proactive engagement with pharma companies earlier in the development pipeline.

It will also create guidance that takes a more proactive approach, recognising the value of new classes of technology such as diagnostics, advanced therapy medicinal products and digital health.

NICE has been consulting on proposals to change its cost-effectiveness methods since November last year, including expanding technology reviews beyond Quality Adjusted Life Year (QALY) assessments to include other factors such as the severity of a condition and how technologies reduce health inequalities.

Professor Gillian Leng CBE, NICE chief executive, said: “Our work to produce rapid COVID-19 guidelines during the pandemic has hastened our desire for change. We demonstrated that we can be flexible and fleet of foot, without losing the rigour of our work, and we will now look to embed that approach in our day-to-day work.

“The world around us is changing. New treatments and technologies are emerging at a rapid pace, with real-world data driving a revolution in evidence. We will help busy healthcare professionals to navigate these new changes and ensure patients have access to the best care and latest treatments.”

NICE chairman Sharmila Nebhrajani OBE, said: “The healthcare of the future will look radically different from today – new therapies will combine pills with technologies, genomic medicine will make early disease detection a reality and AI and machine learning will bring digital health in disease prevention and self care to the fore.

“Our new strategy will help us respond to these advances, finding new and more flexible ways to evaluate products and therapies for use in the NHS, ensuring that the most innovative and clinically effective treatments are available to patients at a price the taxpayer can afford.”

The post NICE unveils five year plan promising faster access to medicines appeared first on .

from https://pharmaphorum.com/news/nice/


Unite 2018 report

Introduction

A few Wizcorp engineers attended Unite Tokyo 2018 to learn more about the future of Unity and how to use it in our future projects. Unite is a 3-day event held by Unity in several major cities, including Seoul, San Francisco and Tokyo. It consists of talks given by Unity employees from around the globe, who give insight into existing or upcoming technologies and teach people how to use them. You can find more information about Unite here.

In retrospect, here is a summary of what we learned or found exciting, and what could be useful for the future of Wizcorp.

Introduction first day

The presentation on ProBuilder was very interesting. It showed how to quickly make levels in a style similar to Tomb Raider, for example. You can use blocks and slopes, snap them to the grid, quickly add prefabs inside, and test everything without leaving the editor, which speeds up development tremendously.

They gave a presentation on ShaderGraph. You may already be aware of it, but if not, it's worth checking out.

They talked about the lightweight pipeline, which gives Unity a new modular architecture, with the goal of running on smaller devices. In our case, that means we could get a web app as small as 72 kilobytes! If it delivers as expected (end of 2018), it may seriously reduce the need to stick to web technologies.

They showed a playable web ad that loads and plays within one second over Wi-Fi and then drives the player to the App Store. They think this is a better way to advertise your game.

They have a new toolset for the automotive industry that makes it possible to build very good-looking simulations with models of real cars.

They are organizing Unity Hack Week events around the globe. Check them out if you are not aware of them.

They introduced the Burst compiler, which aims to take advantage of the multi-core processors and generates code with math and vector floating point units in mind, optimizing for the target hardware and providing substantial runtime performance improvements.

They presented improvements in the field of AR, for example a game that plays on a sheet of paper you hold in your hand.

Anime-style rendering

They presented the processes they use in Unity to get as close as possible to anime-style rendering, and the result was very interesting. None of it is rocket science, though: it mostly consists of effects you would use in other games, such as full-screen distortion, blur, bloom, compositing on an HDR buffer, cloud shading, a weather system using fog, skybox color configuration, and tweaking the character lighting volume.

Optimization of mobile games by Bandai Namco

In Idolmaster, a typical stage scene has only 15k polygons, and a character has a little more than that. For performance, the whole stage's textures fit into a single 1024x1024 texture.

For post-processing, they use DoF, bloom, blur and flare, with 1280x720 as the reference resolution (with MSAA).

The project started as an experiment in April 2016, officially began in January 2017, and was released on June 29th of the same year.

They mentioned taking care to minimize draw calls (SetPass calls).

They use texture atlases with indexed vertex buffers to reduce memory usage and improve performance.
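To illustrate why an atlas helps, here is a minimal C# sketch, assuming a square atlas and a sub-texture rectangle given in pixels (both example values are hypothetical): each mesh's UVs are remapped into its own region of the shared texture, so many objects can use one material and be batched into fewer draw calls. `ToAtlasUv` is an illustrative helper, not a Unity API.

```csharp
using System;
using System.Numerics;

// Remap a mesh UV in [0, 1] into the sub-rectangle a texture occupies inside the atlas.
// rectPixels = (x, y, width, height) of the sub-texture inside the atlas, in pixels.
static Vector2 ToAtlasUv(Vector2 uv, (int X, int Y, int W, int H) rectPixels, int atlasSize)
{
    var offset = new Vector2(rectPixels.X, rectPixels.Y) / atlasSize; // where the region starts
    var scale = new Vector2(rectPixels.W, rectPixels.H) / atlasSize;  // how much of the atlas it covers
    return offset + uv * scale;
}

// Example: a 256x256 prop texture placed at (512, 0) inside a 1024x1024 atlas.
var atlasUv = ToAtlasUv(new Vector2(0.5f, 0.5f), (512, 0, 256, 256), 1024);
Console.WriteLine(atlasUv);
```

In a real project this remapping is usually baked into the mesh UVs once at import or build time, rather than computed per frame.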

They used the Snapdragon Profiler to optimize for the target platforms, with an iterative approach: try, improve, try again, and stop when it's good enough.

One of the big challenges was live performances with 13 characters on stage (lots of polygons and data).

Unity profiling and performance improvements

This presentation was made by someone who audits commercial games and gives them support on how to improve the performance or fix bugs.

http://github.com/MarkUnity/AssetAuditor

Mipmaps add 33% to a texture's memory size, so avoid them where they are not needed.
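The 33% figure follows from each mip level having a quarter of the pixels of the previous one, so 1 + 1/4 + 1/16 + ... approaches 4/3. A quick C# check, assuming an uncompressed RGBA32 texture at the 1024x1024 size mentioned earlier (compressed formats change the absolute numbers, not the ratio):

```csharp
using System;

// Bytes used by one mip level of a square, uncompressed texture.
static long LevelBytes(int size, int bytesPerPixel) => (long)size * size * bytesPerPixel;

// Bytes used by the full mip chain: size, size/2, ..., down to 1x1.
static long MipChainBytes(int size, int bytesPerPixel)
{
    long total = 0;
    for (int s = size; s >= 1; s /= 2)
        total += LevelBytes(s, bytesPerPixel);
    return total;
}

const int Size = 1024;  // example texture size
const int Rgba32 = 4;   // assumption: uncompressed RGBA32, 4 bytes per pixel

long baseBytes = LevelBytes(Size, Rgba32);
long chainBytes = MipChainBytes(Size, Rgba32);
Console.WriteLine($"Without mipmaps: {baseBytes} bytes");
Console.WriteLine($"With mipmaps:    {chainBytes} bytes (x{(double)chainBytes / baseBytes:F3})");
```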

Enabling Read/Write on a texture asset always adds 50% to the texture size, since a copy needs to remain in main memory. The same applies to meshes.

Vertex compression (in Player Settings) simply uses half-precision floating-point values for vertices.
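A minimal C# sketch of what half precision costs, using .NET's `System.Half` (available from .NET 5) as a stand-in; this is not Unity's vertex compression code. A position shrinks from 12 to 6 bytes, but precision drops as values grow: between 512 and 1024 a half can only step in increments of 0.5, so vertices far from the origin get visibly snapped.

```csharp
using System;

// Round-trip a 32-bit float through a 16-bit System.Half.
static float ToHalfAndBack(float value) => (float)(Half)value;

const int FloatPositionBytes = 3 * sizeof(float); // x, y, z as 32-bit floats: 12 bytes
const int HalfPositionBytes = 3 * 2;              // x, y, z as 16-bit halves: 6 bytes

foreach (float v in new[] { 0.5f, 1.001f, 1000.1f })
    Console.WriteLine($"{v} -> {ToHalfAndBack(v)}");
Console.WriteLine($"Bytes per position: {FloatPositionBytes} -> {HalfPositionBytes}");
```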

Play with animation compression settings.

ETC Crunch textures are decrunched on the CPU, so be careful about the additional load.

Beware of animation culling: when offscreen, culled animations are not processed (as if disabled). With non-deterministic animations, this means that when the animation is enabled again, it has to be computed for all the time during which it was disabled, which may create a huge CPU spike (this can also happen when disabling and then re-enabling an object).

Presentation of Little Champions

Looks like a nice game.

It was started on Unity 5.x and then ported to Unity 2017.x.

They do their own custom physics processes, by using WaitForFixedUpdate from within FixedUpdate. The OnTriggerXXX and OnCollisionXXX handlers are called afterwards.

They used a very nice level editor for iPad during development. They say it was key to creating good puzzle levels: they could test them quickly, fix them, and try again, all on the final device the game runs on.

Machine learning

A very interesting presentation showed how to teach a computer to play a simple Wipeout clone. It was probably as simple as it gets, since you can only steer left or right and watch out for walls using 8 raycasts.

I enthusiastically suggest reading about machine learning yourself, since there isn't room in this short article for a full explanation of the concepts covered there. But the presenter was excellent.

Some concepts:

There are two training methods: reinforcement learning (learning through rewards, trial and error, and super-speed simulation, so that the agent becomes "mathematically optimal" at the task) and imitation learning (learning like humans, through demonstrations and without rewards, which requires real-time interaction).

You can also use cooperative agents (one brain, the teacher, and two agents, like players or hands, playing together toward a given goal).

Learning environment: Agent <- Brain <- Academy <- Tensorflow (for training AIs).
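To make the reinforcement learning idea (trial and error guided by rewards) concrete, here is a tiny tabular Q-learning sketch in C#. It is a swapped-in textbook technique on a hypothetical 1-D track, not what Unity's ML toolkit uses internally, and the learning rate, discount, and exploration values are arbitrary choices:

```csharp
using System;

// Hypothetical toy task: a 1-D track with cells 0..4. Reaching cell 4 gives +1 and
// ends the episode; every other step costs -0.01 (values chosen for illustration).
const int Cells = 5, Goal = 4, Left = 0, Right = 1;
const double Alpha = 0.5, Gamma = 0.9, Epsilon = 0.2; // learning rate, discount, exploration

var q = new double[Cells, 2]; // Q-table: estimated return of each (cell, action)
var rng = new Random(42);     // fixed seed so the run is repeatable

// Greedy action for a cell; ties go Left, so moving Right has to be learned from rewards.
int Greedy(int cell) => q[cell, Right] > q[cell, Left] ? Right : Left;

for (int episode = 0; episode < 500; episode++)
{
    int s = 0;
    for (int step = 0; step < 100 && s != Goal; step++)
    {
        // Epsilon-greedy: mostly exploit what was learned, sometimes explore at random.
        int a = rng.NextDouble() < Epsilon ? rng.Next(2) : Greedy(s);
        int next = Math.Clamp(s + (a == Right ? 1 : -1), 0, Goal);
        double reward = next == Goal ? 1.0 : -0.01;
        double future = next == Goal ? 0.0 : Math.Max(q[next, Left], q[next, Right]);
        // Q-learning update: move the estimate toward reward + discounted best future value.
        q[s, a] += Alpha * (reward + Gamma * future - q[s, a]);
        s = next;
    }
}

for (int i = 0; i < Goal; i++)
    Console.WriteLine($"cell {i}: {(Greedy(i) == Right ? "right" : "left")}");
```

The learned policy ends up steering right in every cell; the real toolkit replaces the table with a neural network so that it can handle continuous inputs such as raycast distances.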

Timeline

Timeline is a plugin for Unity that is designed to create animations that manipulate the entire scene based on time, a bit like Adobe Premiere™.

It consists of tracks with clips that animate properties (a bit like the default animation system). It's very similar, but adds many features aimed at creating movies (typically for cutscenes). For example, animations can blend into each other.

The demo he showed us was very interesting: he used it to create an entire RTS game.

Every section would be scripted (enemy reactions, cutscenes, etc.), and based on conditions the playhead would move to and execute the appropriate section of scripted gameplay.

He also showed a visual-novel-like system (where it waited for input before proceeding).

He also showed a space shooter. The movement and patterns of bullets and enemies, then waves and full levels, were made into tracks, and those tracks were combined at the appropriate hierarchical level.

Ideas of use for Timeline: rhythm game, endless runner, …

On a personal note, I like his idea: he gave himself one week to create a game using this technology as much as possible, to see what it's worth.

What was interesting (and hard to summarize in a few lines here, but I recommend checking it out) is that he sometimes uses Timeline to dictate the gameplay and sometimes the opposite. Used wisely, it can be a great game design tool for quickly building a prototype.

Timeline is able to instantiate objects, read scriptable objects and is very extensible.

It's also used by programmers or game designers to quickly create the "scaffolding" of a scene and hand it to artists and designers, instead of having them guess how long each clip should take, etc.

Another interesting feature of Timeline is the ability to start or resume at any point very easily. This is very handy, for instance, in the space shooter to test difficulty and level transitions.
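Because every clip is positioned on its track by time, starting or resuming anywhere amounts to evaluating the track at a new playhead time. A minimal C# sketch of that idea, with hypothetical clip names and timings (this is not the Timeline API):

```csharp
using System;
using System.Linq;

// Hypothetical clips on a single track: (name, start, duration), in seconds.
var clips = new (string Name, double Start, double Duration)[]
{
    ("Intro", 0.0, 5.0),
    ("Wave1", 5.0, 20.0),
    ("Boss", 25.0, 30.0),
};

// Every clip is a pure function of the playhead time, so seeking is just evaluating
// the track at another time: nothing before that point has to be replayed.
string[] ActiveAt(double time) =>
    clips.Where(clip => time >= clip.Start && time < clip.Start + clip.Duration)
         .Select(clip => clip.Name)
         .ToArray();

// Progress (0..1) inside the named clip at the given time, or null if it is not playing.
double? Progress(string name, double time)
{
    var clip = clips.Single(x => x.Name == name);
    double local = time - clip.Start;
    return local >= 0 && local < clip.Duration ? local / clip.Duration : (double?)null;
}

// Jump straight into the middle of the first wave, e.g. to test its difficulty.
Console.WriteLine(string.Join(", ", ActiveAt(15.0)));
Console.WriteLine(Progress("Wave1", 15.0));
```

Gameplay that depends on accumulated state (score, health) would still need its own snapshot, which is one reason the talk's approach scripts sections rather than free-form simulation.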

We suggest downloading "Default Playables" from the Asset Store to get started with Timeline.

Cygames: about optimization for mid-range devices

Features they used

Sun shaft

Lens flare (using Unity's collision feature to determine occlusion; setting colliders properly on all relevant objects, including for example the fingers of a hand, was a challenge)

Tilt shift (not very convincing, just using the depth information to blur in post processing)

Toon rendering

They rewrote the lighting pipeline entirely and packed various maps (like the normal map) into the environment maps.
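The talk did not say exactly how the maps were packed. One common technique, shown here as an assumption, is to store only the X and Y of a unit-length tangent-space normal in two 8-bit channels and rebuild Z in the shader, which frees the other channels for different data. A C# sketch of the math (the helper names are hypothetical):

```csharp
using System;

// Map a component in [-1, 1] to an 8-bit channel value in [0, 255], and back.
static byte Encode(float v) => (byte)MathF.Round((v * 0.5f + 0.5f) * 255f);
static float Decode(byte b) => b / 255f * 2f - 1f;

// Store only X and Y of a unit-length tangent-space normal.
static (byte X, byte Y) PackNormal(float x, float y) => (Encode(x), Encode(y));

// Rebuild Z from x^2 + y^2 + z^2 = 1, assuming the normal faces outward (z >= 0).
static (float X, float Y, float Z) UnpackNormal(byte px, byte py)
{
    float x = Decode(px), y = Decode(py);
    float z = MathF.Sqrt(MathF.Max(0f, 1f - x * x - y * y)); // clamp guards against rounding error
    return (x, y, z);
}

var packed = PackNormal(0.6f, 0f);
var n = UnpackNormal(packed.X, packed.Y);
Console.WriteLine($"channels ({packed.X}, {packed.Y}) -> normal ({n.X:F2}, {n.Y:F2}, {n.Z:F2})");
```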

They explained when ETC2 is preferable to ETC: it basically reduces color banding, but takes more time to compress at the same quality and is not supported on older devices, which is why they chose not to use it until recently.

Other than that, they mentioned various server-side techniques they used to ensure a good framerate and responsiveness. They also mentioned that they reserved a machine with a 500 GB hard drive just for the Unity Cache Server.

Progressive lightmapper

The presentation covered their progress on the new lightmapper, of which we had already seen a video some time ago (link below). This time, the presenter applied it to a small game he was making with a sort of toon-shaded environment. He showed the effect of the different parameters and the power of the new lighting engine.

A video: https://www.youtube.com/watch?v=cRFwzf4BHvA

This has to be enabled in the Player Settings (instead of the Enlighten engine).

The big news is that lighting is displayed directly in the editor (instead of having to start the game, get Unity to bake it, etc.).

The scene is initially displayed without lighting, and textures are gradually updated with baked lighting information as it becomes available. You can keep working in the meantime.

Prioritize View option: bakes what's visible in the camera viewport first (good for productivity, and works just as you'd expect).

He explained some parameters that come into play when selecting the best trade-off between quality and speed:

Direct samples: simply rays cast from a texel (a pixel of the lightmap texture) to all the lights; if a ray reaches the light, the texel is lit, and if it is blocked, it is not.

Indirect samples: rays that bounce (e.g. emitted from the ground, then bouncing off an object, then off the skybox).

Bounces: 1 should be enough for very open scenes; otherwise you might need more (indoor scenes, etc.).
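The direct-sample idea can be sketched in a few lines of C#. This is a simplified illustration, assuming point lights and spherical occluders, and not the Progressive Lightmapper's implementation: one ray per light from the texel, and a light counts only if no occluder blocks that ray.

```csharp
using System;
using System.Numerics;

// True if the segment from `from` to `to` passes through the sphere.
static bool SegmentHitsSphere(Vector3 from, Vector3 to, Vector3 center, float radius)
{
    Vector3 d = to - from;
    float len2 = d.LengthSquared();
    // Closest point of the segment to the sphere centre (parameter clamped to [0, 1]).
    float t = len2 > 0f ? Math.Clamp(Vector3.Dot(center - from, d) / len2, 0f, 1f) : 0f;
    Vector3 closest = from + d * t;
    return Vector3.DistanceSquared(closest, center) <= radius * radius;
}

// One direct sample per light: the light contributes only if nothing blocks the ray.
static int VisibleLights(Vector3 texel, Vector3[] lights, (Vector3 Center, float Radius)[] occluders)
{
    int lit = 0;
    foreach (var light in lights)
    {
        bool blocked = false;
        foreach (var (center, radius) in occluders)
            blocked |= SegmentHitsSphere(texel, light, center, radius);
        if (!blocked) lit++;
    }
    return lit;
}

var texel = Vector3.Zero;
var lights = new[] { new Vector3(0, 10, 0), new Vector3(10, 10, 0) };
var occluders = new[] { (new Vector3(0, 5, 0), 1f) }; // a ball right above the texel
Console.WriteLine($"{VisibleLights(texel, lights, occluders)} of {lights.Length} lights reach the texel");
```

Indirect samples extend the same idea by letting rays continue from the surfaces they hit, once per bounce.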

Filtering smooths out the result of the bake, which can look cartoonish.

They added the A-Trous blur method (preserves edges and AO).
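For reference, the general edge-avoiding A-Trous technique can be sketched as follows, reduced to one dimension for readability. The B3-spline kernel, the Gaussian edge-stopping weight, and the `sigma` parameter are assumptions taken from the common formulation of the technique, not details from the talk or from Unity's implementation:

```csharp
using System;

// One pass: a 5-tap B3-spline kernel whose taps are `step` samples apart ("with holes"),
// where each tap is also down-weighted by how different it is from the centre sample.
static double[] ATrousPass(double[] signal, int step, double sigma)
{
    double[] kernel = { 1.0 / 16, 1.0 / 4, 3.0 / 8, 1.0 / 4, 1.0 / 16 };
    var result = new double[signal.Length];
    for (int i = 0; i < signal.Length; i++)
    {
        double sum = 0, weightSum = 0;
        for (int k = -2; k <= 2; k++)
        {
            int j = Math.Clamp(i + k * step, 0, signal.Length - 1); // clamp at the borders
            double diff = signal[j] - signal[i];
            double w = kernel[k + 2] * Math.Exp(-diff * diff / (sigma * sigma)); // edge-stopping weight
            sum += w * signal[j];
            weightSum += w;
        }
        result[i] = sum / weightSum;
    }
    return result;
}

// Each pass doubles the tap spacing (1, 2, 4, ...), widening the blur at constant cost per pass.
static double[] ATrous(double[] signal, int passes, double sigma)
{
    for (int p = 0; p < passes; p++)
        signal = ATrousPass(signal, 1 << p, sigma);
    return signal;
}

// Hypothetical input: a noisy dark region next to a bright one.
double[] input = { 0.0, 0.1, 0.0, 0.1, 0.0, 1.0, 0.9, 1.0, 0.9, 1.0 };
double[] output = ATrous(input, 3, 0.3);
Console.WriteLine(string.Join(" ", Array.ConvertAll(output, v => v.ToString("F2"))));
```

Small differences (noise) are averaged away, while the large jump between the two regions gets a near-zero weight and survives, which is the edge-preserving behaviour mentioned above.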

Be careful with UV charts, which control how Unity divides objects (based on their normals, so each face of a cube would be in a different UV chart, for example); the filtering stops at the edge of a chart, creating a hard edge. More UV charts means a more "faceted" render (like low-poly). Note that with a large number of UV charts, the object becomes round again, because the filtering blurs everything.

Mixed modes: normally, lights are either realtime or baked; mixed lights combine both.

There are 3 modes: subtractive (shadows are subtracted using a single color, which can look out of place); shadowmask (shadows are baked into separate lightmaps so that they can be recolored, which is still fast and flexible); and the most expensive one, where everything is done dynamically (useful for a sunlight cycle, for example). Distance shadowmask uses dynamic shadows only for objects close to the camera, and baked lightmaps otherwise.
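The distance shadowmask rule boils down to a per-object decision based on distance to the camera. A hypothetical C# sketch, with an arbitrary example shadow distance (in Unity the threshold comes from the quality settings rather than a helper like this):

```csharp
using System;

// Hypothetical rule for the distance-shadowmask idea: real-time shadows near the camera,
// baked shadowmask data beyond the shadow distance.
static string ShadowSourceFor(float distanceToCamera, float shadowDistance) =>
    distanceToCamera <= shadowDistance ? "realtime" : "baked";

const float ShadowDistance = 50f; // assumption: example value in world units

foreach (float d in new[] { 10f, 49.9f, 120f })
    Console.WriteLine($"object at {d}: {ShadowSourceFor(d, ShadowDistance)} shadows");
```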

The new C# Job system

https://unity3d.com/unity/features/job-system-ECS ← available from Unity 2018.1, along with the new .NET 4.x.

They are gradually bringing entity/component concepts into Unity.

Eventually they'll phase out the GameObject, which is too central and forces too much work to stay single-threaded.

They explained why they made this choice:

Take a list of GameObjects, each having a Transform, a Collider and a Rigidbody. Those parts are laid out in memory sequentially, object by object. A Transform actually holds many properties, so accessing only a few of them across many objects (like particles) makes poor use of the cache.

With the entity/component system, you declare the members you are accessing, and the data layout can be optimized for that. It can also be properly multi-threaded. All of this is combined with the new Burst compiler, which generates more performant code for the target hardware.
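The cache argument can be quantified without a benchmark by counting the cache lines touched when only one field per object is read. A C# sketch, assuming 64-byte cache lines and 16 floats per object as a stand-in for a large Transform-like layout (both numbers are assumptions, not figures from the talk):

```csharp
using System;
using System.Collections.Generic;

const int CacheLineBytes = 64;   // assumption: a common CPU cache-line size
const int FloatBytes = sizeof(float);
const int FieldsPerObject = 16;  // assumption: 16 floats per object in the array-of-structs layout
const int YField = 1;            // the single field the update actually needs

// Distinct cache lines touched when reading the given byte offsets.
static int LinesTouched(IEnumerable<long> byteOffsets, int lineBytes)
{
    var lines = new HashSet<long>();
    foreach (long offset in byteOffsets)
        lines.Add(offset / lineBytes);
    return lines.Count;
}

// Array of structs: object i's fields are stored together, so its "y" sits inside a 64-byte record.
static IEnumerable<long> AosOffsets(int count, int fieldsPerObject, int field, int floatBytes)
{
    for (int i = 0; i < count; i++)
        yield return ((long)i * fieldsPerObject + field) * floatBytes;
}

// Struct of arrays: all "y" values are stored contiguously.
static IEnumerable<long> SoaOffsets(int count, int floatBytes)
{
    for (int i = 0; i < count; i++)
        yield return (long)i * floatBytes;
}

const int Objects = 1000;
int aosLines = LinesTouched(AosOffsets(Objects, FieldsPerObject, YField, FloatBytes), CacheLineBytes);
int soaLines = LinesTouched(SoaOffsets(Objects, FloatBytes), CacheLineBytes);
Console.WriteLine($"Updating y for {Objects} objects: AoS touches {aosLines} cache lines, SoA touches {soaLines}");
```

With 1000 objects, the array-of-structs layout touches one cache line per object, while the struct-of-arrays layout packs 16 values per line and touches 63.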

Entities don't appear in the hierarchy the way GameObjects do.

In his demo, he managed to display 80,000 snowflakes in the editor instead of 13,000.

Here is some example code:

```csharp
using Unity.Collections;
using Unity.Entities;
using Unity.Jobs;
using Unity.Mathematics;
using Unity.Rendering;
using Unity.Transforms;
using UnityEngine;
using UnityEngine.UI;

public struct SnowflakeData : IComponentData
{
    public float FallSpeedValue;
    public float RotationSpeedValue;
}

public class SnowflakeSystem : JobComponentSystem
{
    // Runs once per entity that has Position, Rotation and SnowflakeData.
    private struct SnowMoveJob : IJobProcessComponentData<Position, Rotation, SnowflakeData>
    {
        public float DeltaTime;

        public void Execute(ref Position pos, ref Rotation rot, ref SnowflakeData data)
        {
            pos.Value.y -= data.FallSpeedValue * DeltaTime; // fall
            rot.Value = math.mul(math.normalize(rot.Value),
                math.axisAngle(math.up(), data.RotationSpeedValue * DeltaTime)); // spin around Y
        }
    }

    protected override JobHandle OnUpdate(JobHandle inputDeps)
    {
        var job = new SnowMoveJob { DeltaTime = Time.deltaTime };
        return job.Schedule(this, 64, inputDeps); // 64 entities per batch
    }
}

public class SnowflakeManager : MonoBehaviour
{
    public int FlakesToSpawn = 1000;
    public static EntityArchetype SnowFlakeArch;

    // Assumed declarations: these fields are used in the talk but were not shown.
    public Material SnowflakeMat;
    public Text EntityDisplayText;
    private int numberOfSnowflakes;

    [RuntimeInitializeOnLoadMethod(RuntimeInitializeLoadType.BeforeSceneLoad)]
    public static void Initialize()
    {
        var entityManager = World.Active.GetOrCreateManager<EntityManager>();
        // Keep the archetype so that SpawnSnow can create entities from it.
        SnowFlakeArch = entityManager.CreateArchetype(
            typeof(Position), typeof(Rotation),
            typeof(MeshInstanceRenderer), typeof(TransformMatrix));
    }

    void Start() { SpawnSnow(); }

    void Update()
    {
        if (Input.GetKeyDown(KeyCode.Space)) { SpawnSnow(); }
    }

    void SpawnSnow()
    {
        var entityManager = World.Active.GetOrCreateManager<EntityManager>();
        // Temporary allocation, so that we can dispose of it afterwards
        var snowFlakes = new NativeArray<Entity>(FlakesToSpawn, Allocator.Temp);
        entityManager.CreateEntity(SnowFlakeArch, snowFlakes);

        for (int i = 0; i < FlakesToSpawn; i++)
        {
            entityManager.SetComponentData(snowFlakes[i],
                new Position { Value = RandomPosition() }); // RandomPosition made by the presenter
            entityManager.SetSharedComponentData(snowFlakes[i],
                new MeshInstanceRenderer { material = SnowflakeMat /* , ... */ });
            entityManager.AddComponentData(snowFlakes[i], new SnowflakeData
            {
                FallSpeedValue = RandomFallSpeed(),
                RotationSpeedValue = RandomFallSpeed()
            });
        }

        // Dispose of the array
        snowFlakes.Dispose();

        // Update UI (variables made by the presenter)
        numberOfSnowflakes += FlakesToSpawn;
        EntityDisplayText.text = numberOfSnowflakes.ToString();
    }

    // Hypothetical stand-ins for the presenter's helpers, which were not shown.
    float3 RandomPosition() =>
        new float3(UnityEngine.Random.Range(-50f, 50f), 50f, UnityEngine.Random.Range(-50f, 50f));

    float RandomFallSpeed() => UnityEngine.Random.Range(1f, 5f);
}
```

Conclusion

We hope you enjoyed this short summary of some of the presentations we attended.

As a general note, I would say that Unite is an event aimed at hardcore Unity fans. There is some time between sessions for networking with Unity engineers (who come from all around the world), and there are not many beginners. It can be a good opportunity to extend your professional connections with (very serious) people from the Unity ecosystem, but it is not great for recruiting, for instance. Either way, you have to go for it and make it happen: by default, the program just has you follow one session after another, with value probably similar to what you would get by watching the official presentations on YouTube a few weeks or months later. I'm a firm believer that socializing is far better than watching videos at home, so you won't hear me say that it's a waste of time, but if you are sending people there, it works best when they are proactive and passionate about Unity themselves. If they just use it at work, I feel that the value is rather small, and I would even venture that this is a bit of a failure on the Unity team's part, as it can be hard to see who they are targeting.

There is also the Unite party, which you have to book well in advance and which may add networking value, but none of us could attend.


Boost AI Production With Data Agents And BigQuery Platform

Poor data accessibility can hinder AI adoption, since so much data is unstructured and unmanaged. For businesses, data should be accessible, actionable, and transformative. A data cloud built on open standards that connects data to AI in real time, together with conversational data agents that push the limits of conventional AI, is available today to help you do this.

An open real-time data ecosystem

Earlier this year, Google Cloud announced plans to make BigQuery a single platform for data and AI use cases, spanning all data formats, multiple engines, governance, ML, and business intelligence. Google Cloud is also announcing a managed Apache Iceberg experience for customers who use open formats, and is adding document, audio, image, and video data processing to simplify multimodal data preparation.

Volkswagen uses BigQuery to ground its AI models in owner's manuals, customer FAQs, help center articles, and official Volkswagen YouTube videos.

New managed services for Apache Flink and Apache Kafka let customers ingest, set up, tune, scale, monitor, and upgrade real-time applications. With BigQuery workflows, now in preview, data engineers can build and run data pipelines manually, via API, or on a schedule.

Another major addition is BigQuery continuous queries, which let customers activate insights in real time. In the past, "real-time" often meant analyzing data that was minutes or hours old, but data ingestion and analysis are changing rapidly. The pace of customer engagement and AI-driven automation has substantially lowered the acceptable latency for decision-making: the path from insight to activation must be seamless and take seconds, not minutes or hours. Google Cloud has also added real-time data sharing to the Analytics Hub data marketplace, in preview.

Google Cloud is also launching BigQuery pipe syntax to help customers manage, analyze, and gain value from log data. Data teams can simplify data transformations with SQL designed for semi-structured log data.

Connect all data to AI

BigQuery customers can generate and search embeddings at scale for semantic nearest-neighbor search, entity resolution, semantic search, similarity detection, retrieval-augmented generation (RAG), and recommendations. Integration with Vertex AI makes it easy to work with text, images, video, multimodal data, and structured data. BigQuery's integration with LangChain, now generally available, simplifies data pre-processing, embedding creation and storage, and vector search.
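Conceptually, semantic nearest-neighbor search ranks stored embeddings by their similarity to a query embedding. The sketch below does this by brute force in plain Python under stated assumptions: the three-dimensional vectors and document names are invented for illustration, cosine similarity is assumed as the similarity measure, and none of this is BigQuery's API. In BigQuery the search runs in SQL (for example through its VECTOR_SEARCH function), and an index such as ScaNN approximates this exact ranking so it scales to very large tables.

```python
import math

# Toy embeddings, invented for illustration; real embeddings have hundreds of dimensions.
CORPUS = {
    "owners_manual": [0.9, 0.1, 0.0],
    "customer_faq": [0.8, 0.2, 0.1],
    "product_video": [0.0, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_neighbors(query, corpus, k=2):
    """Exact top-k search: score every stored vector, keep the k most similar."""
    ranked = sorted(
        corpus.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:k]]


print(nearest_neighbors([1.0, 0.0, 0.0], CORPUS))  # ['owners_manual', 'customer_faq']
```

Brute force scores every vector for every query, which is why approximate indexes matter once a table holds millions or billions of embeddings.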

To improve vector search, Google Cloud is previewing ScaNN-based indexes for large-scale queries, the same technology used by Google Search and YouTube. The ScaNN index supports over one billion vectors and delivers top-tier query performance, enabling high-scale workloads for every enterprise.

Google Cloud is also simplifying data processing through a Python API with BigQuery DataFrames. Synthetic data can stand in for real data in ML model training and system testing, so Google Cloud is partnering with Gretel AI to generate synthetic data in BigQuery and speed up AI experimentation. This data closely resembles your actual data but does not contain sensitive information.

Finer governance and data integration

Tens of thousands of companies power their data clouds with BigQuery and AI. In the data-driven AI era, however, enterprises must manage more data types and more workloads.

BigQuery's serverless design helps Box process hundreds of thousands of events per second and manage petabyte-scale storage for billions of files and millions of users. Fine-grained access control in BigQuery helps Box locate, classify, and secure sensitive data fields.

As data access and AI use cases grow, data management and governance become more important. Google Cloud unveiled BigQuery's unified catalog, which automatically harvests, ingests, and indexes metadata from data sources, AI models, and BI assets to help you discover your data and AI assets. BigQuery catalog semantic search, in preview, lets you find and query all those data assets regardless of type or location. Users can now ask questions in natural language, and BigQuery interprets their intent to retrieve the most relevant results, making it easier to locate what they need.

Google Cloud is also enabling more third-party data sources for your use cases and workflows. Equifax recently expanded its partnership with Google Cloud to securely offer anonymized, differentiated loan, credit, and commercial marketing data through BigQuery.

Equifax believes more data leads to smarter decisions. By providing differentiated data on Google Cloud, Equifax meets its clients on their preferred channel and helps them make predictive, informed decisions faster and with more agility.

The new BigQuery metastore makes data available to many execution engines. Starting next month in preview, multiple engines will be able to run on a single copy of data across structured and unstructured object tables, providing a unified view for policy, performance, and workload orchestration.

Looker lets you apply BigQuery's new governance capabilities to BI. You can use catalog metadata from Looker instances to collect Looker dashboards, explores, and dimensions without setting up, maintaining, or operating your own connector.

Finally, BigQuery now offers disaster recovery for business continuity. It provides failover and redundant compute resources, backed by an SLA, for business-critical workloads, so that in addition to your data, your BigQuery analytics workloads can fail over.

Gemini conversational data agents

Organizations around the world want LLM-powered data agents that perform internal and customer-facing tasks, drive data access, deliver unique insights, and prompt action. Google Cloud is developing new conversational APIs that let developers build data agents for self-service data access and monetize their data to differentiate their offerings.

Conversational analytics

Google Cloud used these APIs to build the Gemini conversational analytics experience in Looker. Combined with the business logic models in Looker's enterprise-scale semantic layer, this lets you ground AI in a single source of truth with consistent metrics across the enterprise. You can then explore your data in natural language, much as you would use Google Search.

LookML semantic data models let you build governed metrics and semantic relationships between data models for your data agents. LookML models don't only describe your data; you can also query them to retrieve it.

Data agents run on a dynamic data knowledge graph. BigQuery powers the dynamic knowledge graph, which connects data, actions, and relationships using usage patterns, metadata, historical trends, and more.

Last but not least, Gemini in BigQuery is now generally available, assisting data teams with data migration, preparation, code assistance, and insights. Your business and analyst teams can now talk with your data and get insights in seconds, fostering a data-driven culture. Ready-to-run queries and AI-assisted data preparation in BigQuery Studio enable natural-language pipeline building and reduce guesswork.

Connect all your data to AI by migrating it to BigQuery with its data migration tools. A product roadmap webcast covers these BigQuery platform updates.

Read more on Govindhtech.com


Building a knowledge graph with topic networks in Amazon Neptune

This is a guest blog post by Edward Brown, Head of AI Projects; Eduardo Piairo, Architect; Marcia Oliveira, Lead Data Scientist; and Jack Hampson, CEO at Deeper Insights.

We originally developed our Amazon Neptune-based knowledge graph to extract knowledge from a large textual dataset using high-level semantic queries. This resource would serve as the backend to a simplified, visual web-based knowledge extraction service. Given the sheer scale of the project (the amount of textual data, plus the overhead of the semantic data with which it was enriched), a robust, scalable graph database infrastructure was essential.

In this post, we explain how we used Amazon's graph database service, Neptune, along with complementary Amazon technologies, to solve this problem.

Some of our more recent projects have required that we build knowledge graphs of this sort from different datasets, so as we proceed with our explanation, we use these as examples. But first we should explain why we decided on a knowledge graph approach for these projects in the first place.

Our first dataset consists of medical papers describing research on COVID-19. During the height of the pandemic, researchers and clinicians were struggling with over 4,000 papers on the subject released every week and needed to onboard the latest information and best practices in as short a time as possible. To do this, we wanted to allow users to create their own views on the data (custom topics or themes) to which they could then subscribe via email updates. New and interesting facts within those themes, appearing as new papers are added to our corpus, arrive as alerts in the user's inbox. Allowing a user to design these themes required an interactive knowledge extraction service. This eventually became the Covid Insights Platform.
The platform uses a Neptune-based knowledge graph and topic network that takes over 128,000 research papers, connects key concepts within the data, and visually represents these themes on the front end.

Our second use case originated with a client who wanted to mine articles discussing employment and occupational trends to yield competitor intelligence. This also required that the data be queried by the client at a broad, conceptual level, where those queries understand domain concepts like companies, occupational roles, skill sets, and more. Therefore, a knowledge graph was a good fit for this project.

We think this article will be interesting to NLP specialists, data scientists, and developers alike, because it covers a range of disciplines, including network science, knowledge graph development, and deployment using the latest knowledge graph services from AWS.

Datasets and resources

We used the following raw textual datasets in these projects:

CORD-19 – CORD-19 is a publicly available resource of over 167,000 scholarly articles, including over 79,000 with full text, about COVID-19, SARS-CoV-2, and related coronaviruses.
Client Occupational Data – A private national dataset consisting of articles pertaining to employment and occupational trends and issues, used for mining competitor intelligence.

To provide our knowledge graphs with structure relevant to each domain, we used the following resources:

Unified Medical Language System (UMLS) – The UMLS integrates and distributes key terminology, classification and coding standards, and associated resources to promote creating effective and interoperable biomedical information systems and services, including electronic health records.
Occupational Information Network (O*NET) – The O*NET is a free online database that contains hundreds of occupational definitions to help students, job seekers, businesses, and workforce development professionals understand today's world of work in the US.
Adding knowledge to the graph

In this section, we outline our practical approach to creating knowledge graphs on Neptune, including the technical details and the libraries and models used.

To create a knowledge graph from text, the text needs to be given some sort of structure that maps it onto the primitive concepts of a graph, like vertices and edges. A simple way to do this is to treat each document as a vertex and connect it via a has_text edge to a Text vertex that contains the document content in its string property. But obviously this sort of structure is much too crude for knowledge extraction: all graph and no knowledge. If we want our graph to be suitable for querying to obtain useful knowledge, we have to provide it with a much richer structure.

Ultimately, we decided to add the relevant structure (and therefore the relevant knowledge) using a number of complementary approaches that we detail in the following sections, namely the domain ontology approach, the generic semantic approach, and the topic network approach.

Domain ontology knowledge

This approach involves selecting resources relevant to the dataset (in our case, UMLS for biomedical text or O*NET for documents pertaining to occupational trends) and using those to identify the concepts in the text. From there, we link back to a knowledge base providing additional information about those concepts, thereby providing a background taxonomical structure according to which those concepts interrelate.

That all sounds a bit abstract, granted, but it can be clarified with some basic examples. Imagine we find the following sentence in a document:

Semiconductor manufacturer Acme hired 1,000 new materials engineers last month.

This text contains a number of concepts (called entities) that appear in our relevant ontology (O*NET), namely the field of semiconductor manufacturers, the concept of staff expansion described by "hired," and the occupational role materials engineer.
After we align these concepts to our ontology, we get access to a huge amount of background information contained in its underlying knowledge base. For example, we know that the sentence mentions not only an occupational role with a SOC code of 17-2131.00, but that this role requires critical thinking, knowledge of math and chemistry, experience with software like MATLAB and AutoCAD, and more. In the biomedical context, on the other hand, the strings "SARS-coronavirus," "SARS virus," and "severe acute respiratory syndrome virus" are no longer just three different, meaningless strings, but a single concept, Human Coronavirus with a Concept ID of C1175743, and therefore a type of RNA virus, something that could be treated by a vaccine, and so on.

In terms of implementation, we parsed both contexts (biomedical and occupational) in Python using the spaCy NLP library. For the biomedical corpus, we used a pretrained spaCy pipeline, SciSpacy, released by Allen NLP. The pipeline consists of tokenizers, syntactic parsers, and named entity recognizers retrained on biomedical corpora, along with named entity linkers to map entities back to their UMLS concept IDs. These resources turned out to be really useful because they saved a significant amount of work.

For the occupational corpora, on the other hand, we weren't quite as lucky. Ultimately, we needed to develop our own pipeline containing named entity recognizers trained on the dataset and write our own named entity linker to map those entities to their corresponding O*NET SOC codes.

In any event, hopefully it's now clear that aligning text to domain-specific resources radically increases the level of knowledge encoded into the concepts in that text (which correspond to the vertices of our graph). What we haven't yet done is take advantage of the information in the text itself: in other words, the relations between those concepts (which correspond to graph edges) that the text is asserting.
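The payoff of entity linking is that different surface strings collapse onto one concept, and therefore onto one graph vertex. The following is a minimal sketch of that idea, not SciSpacy's linker: it assumes a hand-written alias dictionary (the hypothetical ALIASES table and link_mentions function) and exact, case-insensitive matching, whereas SciSpacy's UMLS linker uses approximate string matching over a far larger set of aliases. The concept ID and synonyms are the ones from the example above.

```python
from dataclasses import dataclass

# Hypothetical alias table: lower-cased surface form -> (concept ID, preferred name).
# A real table would be derived from UMLS rather than written by hand.
ALIASES = {
    "sars-coronavirus": ("C1175743", "Human Coronavirus"),
    "sars virus": ("C1175743", "Human Coronavirus"),
    "severe acute respiratory syndrome virus": ("C1175743", "Human Coronavirus"),
}


@dataclass(frozen=True)
class LinkedMention:
    text: str        # the mention exactly as it appears in the document
    concept_id: str  # ontology concept ID, usable as the graph vertex ID
    name: str        # the concept's preferred name


def link_mentions(mentions):
    """Map each mention to its concept by exact (case-insensitive) alias lookup.

    Mentions with no alias entry are dropped.
    """
    linked = []
    for mention in mentions:
        hit = ALIASES.get(mention.strip().lower())
        if hit is not None:
            concept_id, name = hit
            linked.append(LinkedMention(mention, concept_id, name))
    return linked


mentions = ["SARS-coronavirus", "SARS virus", "severe acute respiratory syndrome virus", "influenza"]
linked = link_mentions(mentions)
print(len(linked), {m.concept_id for m in linked})  # 3 {'C1175743'}
```

Because all three mentions resolve to the same ID, every sentence that uses any of them attaches to a single vertex, and that vertex inherits everything the ontology knows about the concept.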
To capture those relations, we extract the generic semantic knowledge expressed in the text itself.

Generic semantic knowledge

This approach is generic in that it could in principle be applied to any textual data, without any domain-specific resources (although of course models fine-tuned on text in the relevant domain perform better in practice). This step aims to enrich our graph with the semantic information the text expresses between its concepts.

We return to our previous example:

Semiconductor manufacturer Acme hired 1,000 new materials engineers last month.

As mentioned, we already added the concepts in this sentence as graph vertices. What we want to do now is create the appropriate relations between them to reflect what the sentence is saying: to identify which concepts should be connected by edges like hasSubject, hasObject, and so on.

To implement this, we used a pretrained Open Information Extraction pipeline, based on a deep BiLSTM sequence prediction model, also released by Allen NLP. Even so, had we discovered them sooner, we would likely have moved these parts of our pipelines to Amazon Comprehend and Amazon Comprehend Medical, which specializes in biomedical entity and relation extraction.

In any event, we can now link the concepts we identified in the previous step (the company, Acme; the verb, hired; and the role, materials engineer) to reflect the full knowledge expressed by both our domain-specific resource and the text itself. This in turn allows this sort of knowledge to be surfaced by way of graph queries.

Querying the knowledge-enriched graph

By this point, we had a knowledge graph that allowed for some very powerful low-level queries. In this section, we provide some simplified examples using Gremlin.
Some aspects of these queries (like searching for concepts using vertex properties and strings like COVID-19) are aimed at readability in the context of this post, and are much less efficient than they could be (by instead using the vertex ID for that concept, which exploits a unique Neptune index). In our production environments, queries required this kind of refactoring to maximize efficiency.

For example, we can (in our biomedical text) query for anything that produces a body substance, without knowing in advance which concepts meet that definition:

```
g.V().out('entity').
  where(out('instance_of').hasLabel('SemanticType').
        out('produces').has('name', 'Body Substance'))
```

We can also find all mentions of things that denote age groups (such as "child" or "adults"):

```
g.V().out('entity').
  where(out('instance_of').hasLabel('SemanticType').
        in('isa').has('name', 'Age Group'))
```

The graph further allowed us to write more complicated queries, like the following, which extracts information regarding the seasonality of transmission of COVID-19:

```
// Seasonality of transmission.
g.V().hasLabel('Document').as('DocID').
  out('section').out('sentence').as('Sentence').
  out('entity').has('name', within(
      'summer', 'winter', 'spring (season)', 'Autumn', 'Holidays',
      'Seasons', 'Seasonal course', 'seasonality', 'Seasonal Variation')).
  as('Concept').as('SemanticTypes').
  select('Sentence').
  out('entity').has('name', 'COVID-19').as('COVID').
  dedup().
  select('Concept', 'SemanticTypes', 'Sentence', 'DocID').
    by(valueMap('name', 'text')).
    by(out('instance_of').hasLabel('SemanticType').values('name').fold()).
    by(values("text")).
    by(values("sid")).
  dedup().
  limit(10)
```

The following table summarizes the output of this query.

| Concept | Sentence |
|---|---|
| seasonality | COVID-19 has weak seasonality in its transmission, unlike influenza. |
| Seasons | Furthermore, the pathogens causing pneumonia in patients without COVID-19 were not identified, therefore we were unable to evaluate the prevalence of other common viral pneumonias of this season, such as influenza pneumonia and All rights reserved. |
| Holidays | The COVID-19 outbreak, however, began to occur and escalate in a special holiday period in China (about 20 days surrounding the Lunar New Year), during which a huge volume of intercity travel took place, resulting in outbreaks in multiple regions connected by an active transportation network. |
| spring (season) | Results indicate that the doubling time correlates positively with temperature and inversely with humidity, suggesting that a decrease in the rate of progression of COVID-19 with the arrival of spring and summer in the north hemisphere. |
| summer | This means that, with spring and summer, the rate of progression of COVID-19 is expected to be slower. |

In the same way, we can query our occupational dataset for companies that expanded with respect to roles that required knowledge of AutoCAD:

```
g.V().hasLabel('Sentence').as('Sentence').
  out('hasConcept').hasLabel('Role').as('Role').
  where(out('hasTechSkill').has('name', 'AutoCAD')).
  in('hasObject').hasLabel('Expansion').
  out('hasSubject').hasLabel('Company').as('Company').
  select('Role', 'Company', 'Sentence').
    by('socCode').
    by('name').
    by('text').
  limit(1)
```

The following table shows our output.

| Role | Company | Sentence |
|---|---|---|
| 17-2131.00 | Acme | Semiconductor manufacturer Acme hired 1,000 new materials engineers last month. |

We've now described how we added knowledge from domain-specific resources to the concepts in our graph and combined that with the relations between them described by the text. This already represents a very rich understanding of the text at the level of the individual sentence and phrase.
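To make the AutoCAD traversal concrete, here is a pure-Python emulation of it over a tiny in-memory graph, under stated assumptions. It is not gremlinpython and not how Neptune executes queries; the TinyGraph class, the companies_expanding_roles_needing function, the vertex IDs, and the TechSkill label are hypothetical. The edge labels (hasConcept, hasTechSkill, hasSubject, hasObject) come from the query above, and the Open IE triple (Acme, hired, materials engineers) is stored the way that query implies: an Expansion vertex with a hasSubject edge to the company and a hasObject edge to the role.

```python
from collections import defaultdict


class TinyGraph:
    """In-memory property graph: just enough to mimic a few Gremlin steps."""

    def __init__(self):
        self.label = {}  # vertex ID -> vertex label
        self.props = {}  # vertex ID -> property dict
        self.out_e = defaultdict(lambda: defaultdict(list))  # src -> edge label -> [dst]
        self.in_e = defaultdict(lambda: defaultdict(list))   # dst -> edge label -> [src]

    def add_v(self, vid, label, **props):
        self.label[vid] = label
        self.props[vid] = props

    def add_e(self, src, edge_label, dst):
        self.out_e[src][edge_label].append(dst)
        self.in_e[dst][edge_label].append(src)

    def out(self, vid, edge_label, vertex_label):
        """Like out(edge_label).hasLabel(vertex_label)."""
        return [v for v in self.out_e[vid][edge_label] if self.label[v] == vertex_label]

    def in_(self, vid, edge_label, vertex_label):
        """Like in(edge_label).hasLabel(vertex_label)."""
        return [v for v in self.in_e[vid][edge_label] if self.label[v] == vertex_label]


def companies_expanding_roles_needing(g, skill, limit=1):
    """Sentence -> Role (requiring skill) <- Expansion -> Company.

    Returns (socCode, company name, sentence text) rows, like the select().by() steps.
    """
    rows = []
    for sentence in [v for v, lbl in g.label.items() if lbl == "Sentence"]:
        for role in g.out(sentence, "hasConcept", "Role"):
            # where(out('hasTechSkill').has('name', skill))
            if not any(g.props[s].get("name") == skill for s in g.out_e[role]["hasTechSkill"]):
                continue
            for expansion in g.in_(role, "hasObject", "Expansion"):
                for company in g.out(expansion, "hasSubject", "Company"):
                    rows.append((g.props[role]["socCode"], g.props[company]["name"],
                                 g.props[sentence]["text"]))
    return rows[:limit]


g = TinyGraph()
g.add_v("s1", "Sentence", text="Semiconductor manufacturer Acme hired 1,000 new materials engineers last month.")
g.add_v("role", "Role", socCode="17-2131.00")
g.add_v("skill", "TechSkill", name="AutoCAD")
g.add_v("hired", "Expansion")
g.add_v("acme", "Company", name="Acme")
g.add_e("s1", "hasConcept", "role")
g.add_e("role", "hasTechSkill", "skill")  # background knowledge from O*NET
g.add_e("hired", "hasSubject", "acme")    # Open IE: subject of "hired"
g.add_e("hired", "hasObject", "role")     # Open IE: object of "hired"

print(companies_expanding_roles_needing(g, "AutoCAD"))
```

Modeling the predicate as its own vertex, rather than as a single Company-to-Role edge, lets the traversal filter on the kind of event (here Expansion) before reaching the company.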
However, beyond this very low-level interpretation, we also wanted our graph to contain much higher-level knowledge: an understanding of the concepts and relations that exist across the dataset as a whole. This leads to our final approach to enriching our graph with knowledge: the topic network.

Topic network

The core premise behind the topic network is fairly simple: if certain pairs of concepts appear together frequently, they are likely related, and likely share a common theme. This is true even if (or especially if) these co-occurrences occur across multiple documents. Many of these relations are obvious, like the close connection between Lockheed and aerospace engineers in our employment data, but many are more informative, especially if the user isn't yet a domain expert. We wanted our system to understand these trends and to present them to the user: trends across the dataset as a whole, but also limited to specific areas of interest or topics. Additionally, this information allows a user to interact with the data via a visualization, browsing the broad trends in the data and clicking through to see examples of those relations at the document and sentence level.

To implement this, we combined methods from the fields of network science and traditional information retrieval. The former told us the best structure for our topic network, namely an undirected weighted network, where the concepts we specified are connected to each other by a weighted edge if they co-occur in the same context (the same phrase or sentence). The next question was how to weight those edges; in other words, how to represent the strength of the relationship between the two concepts in question.

Edge weighting

A tempting answer here is just to use the raw co-occurrence count between any two concepts as the edge weight. However, this leads to concepts of interest having strong relationships to overly general and uninformative terms.
For example, the strongest relationships to a concept like COVID-19 are ones like patient or cell. This is not because these concepts are especially related to COVID-19, but because they are so common in all documents. Conversely, a truly informative relationship is between terms that are uncommon across the data as a whole, but commonly seen together.

A very similar problem is well understood in the field of traditional information retrieval. Here the informativeness of a search term in a document is weighted using its frequency in that document and its rareness in the data as a whole, using a formula like TF-IDF (term frequency–inverse document frequency). This formula, however, aims at scoring a single word, as opposed to the co-occurrence of two concepts as we have in our graph. Even so, it's fairly simple to adapt the score to our own use case and instead measure the informativeness of a given pair of concepts:

In our first term, we count the number of sentences, s, where both concepts, c1 and c2, occur (raw co-occurrence). In the second, we take the sum of the number of documents where each term appears relative to (twice) the total number of documents in the data. The result is that pairs that often appear together but are both individually rare are scored higher.

Topics and communities

As mentioned, we were interested in allowing our graph to display not only global trends and co-occurrences, but also those within a certain specialization or topic. Analyzing the co-occurrence network at the topic level allows users to obtain information on themes that are important to them, without being overloaded with information. We identified these subgraphs (also known in the network science domain as communities) as groups centered around certain concepts key to specific topics, and, in the case of the COVID-19 data, had a medical consultant curate these candidates into a final set: diagnosis, prevention, management, and so on.
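The pair score is described above only in words. One reading consistent with that description, and with TF-IDF's logarithmic inverse document frequency, is w(c1, c2) = cooc(c1, c2) × log(2N / (df(c1) + df(c2))), where cooc counts sentences containing both concepts, df counts documents containing a concept, and N is the number of documents. The logarithm and the exact form are our assumption, not the authors' published formula. The hypothetical pair_weights function below computes that score over toy data.

```python
import math
from collections import Counter
from itertools import combinations


def pair_weights(documents):
    """Weight each co-occurring concept pair by co-occurrence x rarity.

    `documents` is a list of documents; each document is a list of sentences;
    each sentence is a set of concept IDs. ASSUMED score:
        w(c1, c2) = cooc(c1, c2) * log(2N / (df(c1) + df(c2)))
    """
    n_docs = len(documents)
    df = Counter()    # concept -> number of documents containing it
    cooc = Counter()  # (c1, c2) -> number of sentences containing both
    for doc in documents:
        df.update(set().union(*doc))  # count each concept once per document
        for sentence in doc:
            # sorted() gives each unordered pair one canonical key (undirected edge)
            for pair in combinations(sorted(sentence), 2):
                cooc[pair] += 1
    return {
        (c1, c2): count * math.log(2 * n_docs / (df[c1] + df[c2]))
        for (c1, c2), count in cooc.items()
    }


docs = [
    [{"covid-19", "patient"}, {"covid-19", "patient"}, {"covid-19", "anosmia"}],
    [{"covid-19", "patient"}, {"covid-19", "anosmia"}],
    [{"patient", "cell"}],
    [{"patient", "cell"}],
]
weights = pair_weights(docs)
for pair, w in sorted(weights.items(), key=lambda kv: -kv[1]):
    print(pair, round(w, 3))
# ('anosmia', 'covid-19') 1.386
# ('covid-19', 'patient') 0.863
# ('cell', 'patient') 0.575
```

In the toy data, patient co-occurs with covid-19 in more sentences than anosmia does (3 versus 2), but because patient appears in every document, the rarer anosmia pair gets the higher weight, which is the behavior the text describes. Since each df is at most N, the log term is never negative, and it is zero for two concepts that both appear in every document.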
Our web front end allows you to navigate the trends within these topics in an intuitive, visual way, which has proven to be very powerful in conveying meaningful information hidden in the corpus. For an example of this kind of topic-based navigation, see our publicly accessible COVID-19 Insights Platform.

We've now seen how to enrich a graph with knowledge in a number of different forms, and from several different sources: a domain ontology, generic semantic information in the text, and a higher-level topic network. In the next section, we go into further detail about how we implemented the graph on the AWS platform.

Services used

The following diagram gives an overview of our AWS infrastructure for knowledge graphs. We reference this as we go along in this section.

Amazon Neptune and Apache TinkerPop Gremlin

Although we initially considered setting up our own Apache TinkerPop service, none of us were experts with graph database infrastructure, and we found the learning curve around self-hosted graphs (such as JanusGraph) extremely steep given the time constraints on our project. This was exacerbated by the fact that we didn't know how many users might be querying our graph at once, so rapid and simple scalability was another requirement.

Fortunately, Neptune is a managed graph database service that allows storing and querying large graphs in a performant and scalable way. This also pushed us towards Neptune's native query language, Gremlin, which was also a plus because it's the language the team had most experience with, and it provides a DSL for writing queries in Python, our house language.

Neptune allowed us to solve the first challenge: representing a large textual dataset as a knowledge graph that scales well to serving concurrent, computationally intensive queries. With these basics in place, we needed to build out the AWS infrastructure around the rest of the project.
Proteus

Beyond Neptune, our solution involved two API services, Feather and Proteus, to allow communication between Neptune and its API, and to the outside world. More specifically, Proteus is responsible for managing the administration activities of the Neptune cluster, such as:

Retrieving the status of the cluster
Retrieving a summary of the queries currently running
Bulk data loading

The last activity, bulk data loading, proved especially important because we were dealing with a very large volume of data, and one that will grow with time.

Bulk data loading

This process involved two steps. The first was developing code to integrate the Python-based parsing we described earlier with a final pipeline step to convert the relevant spaCy objects (the nested data structures containing documents, entities, and spans representing predicates, subjects, and objects) into a language Neptune understands. Thankfully, the spaCy API is convenient and well documented, so flattening out those structures into a Gremlin insert query is fairly straightforward. Even so, importing such a large dataset via Gremlin insert queries built from addV() and addE() steps would have been unworkably slow.

To load more efficiently, Neptune supports bulk loading from Amazon Simple Storage Service (Amazon S3). This meant an additional modification to our pipeline to write output in the Neptune CSV bulk load format, consisting of two large files: one for vertices and one for edges. After a little wrangling with datatypes, we had a seamless parse-and-load process. The following diagram illustrates our architecture.

It's also worth mentioning that in addition to the CSV format for Gremlin, the Neptune bulk loader supports popular RDF (Resource Description Framework) formats such as RDF/XML.

Feather

The other service that communicates with Neptune, Feather, manages the interaction between the user (through the front end) and the Neptune instance.
It's responsible for querying the database and delivering the results to the front end. The front-end service is responsible for the visual representation of the data provided by the Neptune database.

The following screenshot shows our front end displaying our biomedical corpus. On the left we can see the topic-based graph browser showing the concepts most closely related to COVID-19. In the right-hand panel we have the document list, showing where those relations were found in biomedical papers at the phrase and sentence level. Lastly, the mail service allows you to subscribe to receive alerts around new themes as more data is added to the corpus, such as the COVID-19 Insights Newsletter.

Infrastructure

Owing to the Neptune security configuration, incoming connections are only possible from within the same Amazon Virtual Private Cloud (Amazon VPC). Because of this, our VPC contains the following resources:

Neptune cluster – We used two instances: a primary instance (also known as the writer, which allows both read and write operations) and a replica (the reader, which only allows read operations).
Amazon EKS – A managed Amazon Elastic Kubernetes Service (Amazon EKS) cluster with three nodes, used to host all our services.
Amazon ElastiCache for Redis – A managed Amazon ElastiCache for Redis instance that we use to cache the results of commonly run queries.

Lessons and potential improvements

Manual provisioning (using the AWS Management Console) of the Neptune cluster is quite simple because at the server level, we only need to deal with a few primary concepts: cluster, primary instance and replicas, cluster and instance parameters, and snapshots.

One of the features that we found most useful was the bulk loader, mainly due to our large volume of data. We soon discovered that updating a huge graph was really painful. To mitigate this, each time a new import was required, we created a new Neptune cluster, bulk loaded the data, and redirected our services to the new instance.
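The parse-and-load step can be sketched as follows, under stated assumptions. It flattens a fragment of the running example into the two files the bulk loader expects, using Neptune's Gremlin CSV load format: system columns ~id and ~label for vertices, ~id, ~from, ~to, and ~label for edges, and typed property headers such as name:String. It also builds the JSON body for a request to the cluster's loader endpoint. The function names, vertex IDs, S3 URI, and IAM role ARN are hypothetical, and uploading the files to S3 and sending the request are left out.

```python
import csv
import io
import json


def write_vertices_csv(vertices, property_names, fh):
    """Write (vertex_id, label, properties) tuples in Gremlin CSV load format.

    Every property column is declared as String; a vertex without a given
    property gets a blank cell in that column.
    """
    writer = csv.writer(fh)
    writer.writerow(["~id", "~label"] + [f"{p}:String" for p in property_names])
    for vertex_id, label, props in vertices:
        writer.writerow([vertex_id, label] + [props.get(p, "") for p in property_names])


def write_edges_csv(edges, fh):
    """Write (from, label, to) tuples as ~id,~from,~to,~label rows with generated IDs."""
    writer = csv.writer(fh)
    writer.writerow(["~id", "~from", "~to", "~label"])
    for i, (src, edge_label, dst) in enumerate(edges):
        writer.writerow([f"e{i}", src, dst, edge_label])


def loader_request_body(s3_uri, iam_role_arn, region):
    """JSON body to POST to https://<cluster-endpoint>:8182/loader."""
    return json.dumps({
        "source": s3_uri,
        "format": "csv",
        "iamRoleArn": iam_role_arn,
        "region": region,
        "failOnError": "FALSE",
    })


# Hypothetical fragment of the running example.
vertices = [
    ("acme", "Company", {"name": "Acme"}),
    ("role-17-2131.00", "Role", {"socCode": "17-2131.00"}),
    ("hired-1", "Expansion", {"text": "hired"}),
]
edges = [("hired-1", "hasSubject", "acme"), ("hired-1", "hasObject", "role-17-2131.00")]

v_out, e_out = io.StringIO(), io.StringIO()
write_vertices_csv(vertices, ["name", "socCode", "text"], v_out)
write_edges_csv(edges, e_out)
print(v_out.getvalue())
print(e_out.getvalue())
print(loader_request_body("s3://example-bucket/graph/",
                          "arn:aws:iam::123456789012:role/NeptuneLoadFromS3", "us-east-1"))
```

Writing files once and loading them in bulk avoids the per-element round trips that made Gremlin insert queries too slow at this data volume, and it also makes the "new cluster per import" approach cheap to repeat.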
Additional functionality we found extremely useful, but have not highlighted specifically, includes AWS Identity and Access Management (IAM) database authentication, encryption, backups, and the associated HTTPS REST endpoints. Despite being very powerful, with a somewhat steep initial learning curve, these features were simple enough to use in practice and overall greatly simplified this part of the workflow.

Conclusion

In this post, we explained how we used the graph database service Amazon Neptune with complementary Amazon technologies to build a robust, scalable graph database infrastructure to extract knowledge from a large textual dataset using high-level semantic queries. We chose this approach because the data needed to be queried by the client at a broad, conceptual level, which made it the perfect candidate for a knowledge graph.

Because Neptune is a managed graph database that can query a graph with millisecond latency, the ease of setup it afforded made it the ideal choice for our time-poor scenario. A bonus we had not considered at the project outset was being able to use other AWS services and features, such as the bulk loader, which also saved us a lot of time. Altogether we were able to get the Covid Insights Platform live within 8 weeks with a team of just three: a data scientist, a DevOps engineer, and a full stack engineer.

We've had great success with the platform, with researchers from the UK's NHS using it daily. Dr Matt Morgan, Critical Care Research Lead for Wales, said "The Covid Insights platform has the potential to change the way that research is discovered, assessed and accessed for the benefits of science and medicine."

To see Neptune in action and to view our Covid Insights Platform, visit https://covid.deeperinsights.com

We'd love your feedback or questions. Email [email protected]

https://aws.amazon.com/blogs/database/building-a-knowledge-graph-with-topic-networks-in-amazon-neptune/

Text

Google Cloud rolls out Vertex AI

At its I/O 2021 conference, Google announced the general availability of Vertex AI, a managed machine learning platform designed to accelerate the deployment and maintenance of artificial intelligence models.

Using Vertex AI, engineers can manage image, video, text, and tabular datasets, build machine learning pipelines to train and evaluate models using Google Cloud algorithms or custom training code. They can then deploy models for online or batch use cases all on scalable managed infrastructure.
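The workflow described above (a managed dataset, training with a Google Cloud algorithm, then online or batch serving) can be sketched with the `google-cloud-aiplatform` Python SDK. This is a minimal sketch, assuming a tabular CSV file in Cloud Storage and AutoML as the Google-provided algorithm; the project, URIs, display names, target column and machine type are hypothetical placeholders, and running it creates billable resources.

```python
def train_deploy_and_predict(project: str, location: str, train_csv_uri: str,
                             batch_csv_uri: str, output_prefix: str,
                             target_column: str):
    """Hypothetical end-to-end flow: dataset -> AutoML training -> online + batch serving."""
    # Imported lazily so this file can be loaded without the SDK installed.
    from google.cloud import aiplatform

    aiplatform.init(project=project, location=location)

    # 1. Register a managed tabular dataset backed by a CSV file in Cloud Storage.
    dataset = aiplatform.TabularDataset.create(
        display_name="example-tabular-dataset",
        gcs_source=[train_csv_uri],
    )

    # 2. Train with a Google-provided algorithm (AutoML) rather than custom code.
    job = aiplatform.AutoMLTabularTrainingJob(
        display_name="example-automl-job",
        optimization_prediction_type="classification",
    )
    model = job.run(
        dataset=dataset,
        target_column=target_column,
        budget_milli_node_hours=1000,  # one node hour of training budget
    )

    # 3a. Online use case: deploy to a managed endpoint for low-latency requests.
    endpoint = model.deploy(machine_type="n1-standard-4")

    # 3b. Batch use case: score a whole file asynchronously; results land in GCS.
    batch_job = model.batch_predict(
        job_display_name="example-batch-job",
        gcs_source=batch_csv_uri,
        gcs_destination_prefix=output_prefix,
        instances_format="csv",
    )
    return endpoint, batch_job
```

Online requests would then go through `endpoint.predict(instances=[...])`; undeploying the model and deleting the endpoint afterwards stops the serving charges.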

Text

AI startup Nucleai has immuno-oncology treatment failures in its sights

Israeli company Nucleai is putting artificial intelligence to work to discover why so many cancer patients don’t respond to immunotherapies, with the help of Swiss drugmaker Debiopharm.

The Tel Aviv-based startup is drawing on expertise garnered by Nucleai’s co-founder and chief executive – Avi Veidman – from his former role deploying AI for Israel’s military.

The same machine vision and learning approaches that the military has used to glean intelligence about potential targets from geospatial data and satellite and high-altitude images are now being deployed in the fight against cancer, says the company.

Debiopharm led the $6.5 million first-round fundraising for Nucleai, which adds to $5 million raised earlier from other investors including Vertex Ventures and Grove Ventures, and could increase in the coming weeks as it is not fully closed.

Founded in 2017, Nucleai has now come out of stealth mode with the aim of finding biomarkers that will be able to predict which patients will respond to immuno-oncology treatments.

At the moment, these drugs are only effective in 20% to 30% of cases, according to the biotech. Identifying which patients will benefit could ensure the drugs are used only in those likely to respond, potentially saving healthcare systems money.

Nucleai says its platform involves modelling the spatial characteristics of both the tumour and the patient’s immune system, using computer vision and machine learning methods. The approach allows it to create “unique signatures that are predictive of patient response,” it adds.

Researchers from the biotech presented some insights into its “precision oncology” platform in a poster presentation at this year’s virtual ASCO congress.

They studied slide images from more than 900 primary breast cancer patients, scanning the images using an automated system for the presence of immune cells and other features, and looking for patterns that could predict how long a patient would go before their disease progressed.

Localised clusters of tumour-infiltrating lymphocytes and having more lymphocytes in the stroma (the margin between malignant and healthy tissues) than in the tumour itself seemed to be associated with a longer progression-free interval (PFI) and potentially could help guide treatment decisions.
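As a rough illustration of the stroma-versus-tumour comparison above, the sketch below computes a lymphocyte density ratio from labelled cell detections. It assumes a hypothetical upstream cell detector and tissue segmentation that tag each cell's type and region, and it normalises counts by region area; the article does not say how Nucleai defines or measures this feature, so this is not the company's method.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Cell:
    kind: str    # hypothetical detector label, e.g. "lymphocyte" or "tumour_cell"
    region: str  # hypothetical segmentation label: "stroma" or "tumour"


def stroma_to_tumour_lymphocyte_ratio(cells: Iterable[Cell],
                                      stroma_area_mm2: float,
                                      tumour_area_mm2: float) -> float:
    """Lymphocyte density in the stroma divided by lymphocyte density in the tumour.

    Values above 1 mean lymphocytes are denser in the stroma than in the tumour.
    """
    if stroma_area_mm2 <= 0 or tumour_area_mm2 <= 0:
        raise ValueError("region areas must be positive")
    counts = {"stroma": 0, "tumour": 0}
    for cell in cells:
        if cell.kind == "lymphocyte" and cell.region in counts:
            counts[cell.region] += 1
    stroma_density = counts["stroma"] / stroma_area_mm2
    tumour_density = counts["tumour"] / tumour_area_mm2
    if tumour_density == 0:
        # No intratumoural lymphocytes: unbounded ratio, or undefined if none anywhere.
        return float("inf") if stroma_density > 0 else float("nan")
    return stroma_density / tumour_density
```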

According to Veidman, Debiopharm’s involvement brings “decades of pharmaceutical expertise which will assist in accelerating our development and market reach.”

The Swiss drugmaker is already an established player in cancer with drugs like Eloxatin (oxaliplatin) and Decapeptyl (triptorelin), and is developing a pipeline of cancer immunotherapies headed by Debio 1143, an IAP inhibitor in mid-stage testing for head and neck cancer.

“The battle between the tumour and the immune cells is clearly visible by inspecting the pathology slide, just like a satellite image of a battlefield,” says Veidman.

“Our AI platform analyses the hundreds of thousands of cells in a slide, examines the interplay between the tumour and the immune system and matches the right patient to the right drug based on these characteristics.”

from https://pharmaphorum.com/news/ai-startup-nucleai-has-immuno-oncology-treatment-failures-in-its-sights/

Text

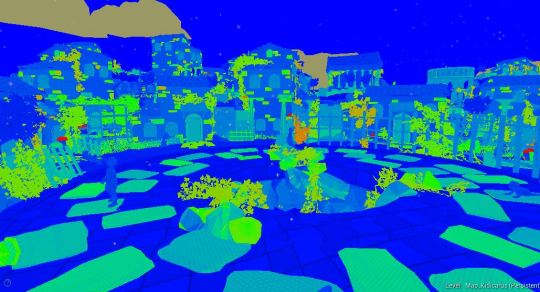

TP2 - Week 08 (Dec 8 to 15)

This week was the last week of production and we had A LOT on our plate! We certainly didn’t slack off! I started by making changes to some assets because their normals were coming out really badly in the engine. With Nina’s help I understood that, for them to look right, I had to use a single smoothing group and unify the normals before reimporting.

I made these changes to the following assets: windows, doors, paths, path edges, path beams and all the tiled roofs. I took the opportunity to modify the path collisions, since they were causing problems. I also made the collisions for the houses (because they hadn’t been done and I didn’t want Unreal to generate them automatically on import).

Following playtests and some feedback I received, I modified the set dressing of the broken bridge to “fill” the void a bit (more walls on the sides, more destruction). I also took the opportunity to polish the dressing of the bridge in general (more statues).

I then built 5 different house prefabs, each with a front door and a back door and a different composition, for maximum reuse without the repetition being noticeable. I also reused my tiles (scaled down) to create bricks that break up the straight lines on the houses. I created another material instance to change their color so they look more like bricks.

Next, I did more light baking tests (yes, again) and, with Bernard’s help, we found a perfect “recipe” for seamless baking with a nice result. To do this, I had to go back over all the assets and foliage in the game to change their lightmap resolution. I also went back over all the prefabs to set their static meshes to “Static”, because Unreal automatically sets them to Movable when the prefab is created.

With that done, Bianka and I created island prefabs to reuse in the environment. I also created a “blank” island to dress the sky at the exit of the Underworld.

Then I tackled the set dressing of the city. Bianka had set up the city’s construction layout and we split the work between the two of us. I took care of the city center and of dressing the arena in the middle of the city.

I had to go back and forth to make sure the player couldn’t leave the arena with a simple jump and wouldn’t leave the camera zone during combat, so I had to “close off” the city center. The challenge was to do this without making the arena feel claustrophobic while still showing the city’s destruction.

I kept dressing the city, and doing all of it took quite a while. I had to go easy on the vines and foliage, especially in non-gameplay areas, so as not to eat up too much performance. In the end, I polished my compositions, did the vertex painting, added the foliage and added FX (falling leaves, dust in the air and fireflies). Here is the area I dressed, followed by screenshots of different spots in the city.

I’m really proud of the result! With the floating islands around it (dressed and positioned by Bianka) it looks really good; it truly feels like a big, beautiful city in the sky!

To save performance, we created several sublevels so that certain sublevels only become visible when the player gets close to them. I therefore moved all the so-called “detail” assets (not visible from afar and not changing the city’s overall silhouette) into a dedicated level whose visibility is only enabled once the player exits the garden.
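The visibility rules described above can be expressed as a small, engine-agnostic sketch. It is not Unreal’s API (in Unreal this kind of streaming is typically handled with level streaming volumes or Blueprint nodes such as Load Stream Level); the sublevel names, positions and radii are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Sublevel:
    name: str
    center: Tuple[float, float, float]
    # Hypothetical: distance within which the sublevel becomes visible.
    # None means the sublevel is always visible.
    activation_radius: Optional[float] = None
    # Hypothetical: "detail" sublevels only shown once the player leaves the garden.
    requires_garden_exit: bool = False


def visible_sublevels(player_pos: Tuple[float, float, float],
                      sublevels: Sequence[Sublevel],
                      garden_exited: bool) -> List[str]:
    """Return the names of the sublevels that should currently be visible."""
    visible = []
    for level in sublevels:
        if level.requires_garden_exit:
            if garden_exited:
                visible.append(level.name)
        elif (level.activation_radius is None
              or math.dist(player_pos, level.center) <= level.activation_radius):
            visible.append(level.name)
    return visible
```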

Finally, I built the lighting for the whole level. The bake took almost 1h20, given the number of assets (including foliage) spread throughout the world, but the result was very satisfying!

All in all, it was quite a project, but the result was worth it! Our scope for the environment was really big, but we made it, phew!

What I would personally improve:

Take on more of the organization and the team meetings

Give more feedback to the other departments on their work and playtest the game more regularly

Start the houses much earlier, since they were a real headache for me (first time making a modular kit) / Spend less time on those darn houses and put my energy elsewhere.

Better communication on my part with some team members.

Not abandon Xavier with the UI :(

Be at school more often

Be more diligent with the blog

What went well for me:

I got reacquainted with ZBrush and its pipeline

I learned a huge amount about how lighting, lightmaps and everything that comes with them work (thanks Bernard)

I managed to make a modular house kit!! (yay!)

I’m proud of myself (and of us)!

I managed not to get overstressed even though we were rushed, and above all not to let it affect my teammates, so that morale stayed high.

I took the criticism I was given well and applied changes accordingly.

Thanks to my whole team for the love and effort put into this project. Every department really gave it their all to produce this game and we are really proud of our project!

On that note, I’m going to sleep until 2018! hehe ;)

Text

Vertex Gen AI Evaluation Service for LLM Evaluation

How can you be certain that your large language models (LLMs) are producing the intended outcomes? How do you pick the best model for your use case? Evaluating the output of generative AI models is difficult. Although these models perform well across many different tasks, it is important to know how well they work for your particular use case.

An overview of a model’s capabilities can be found in leaderboards and technical reports, but selecting the right model for your requirements and assigning it to the right tasks requires a customised evaluation strategy.
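To make a customised evaluation strategy concrete, here is a minimal, generic sketch that scores candidate models’ saved outputs against reference answers from your own use case, using two simple computation-based metrics (exact match and token-level F1), and picks the best model. It is not how the Vertex Gen AI Evaluation Service is implemented; the model names, data and tokenisation rule are hypothetical.

```python
import re
from collections import Counter
from typing import Dict, List, Tuple


def _tokens(text: str) -> List[str]:
    """Lower-case and keep runs of letters/digits as tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def exact_match(prediction: str, reference: str) -> float:
    return float(_tokens(prediction) == _tokens(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall (bag-of-words overlap)."""
    pred, ref = _tokens(prediction), _tokens(reference)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def score_model(predictions: List[str], references: List[str]) -> Dict[str, float]:
    if not references or len(predictions) != len(references):
        raise ValueError("need exactly one prediction per reference")
    pairs = list(zip(predictions, references))
    return {
        "exact_match": sum(exact_match(p, r) for p, r in pairs) / len(pairs),
        "token_f1": sum(token_f1(p, r) for p, r in pairs) / len(pairs),
    }


def pick_best_model(candidates: Dict[str, List[str]], references: List[str],
                    metric: str = "token_f1") -> Tuple[str, Dict[str, Dict[str, float]]]:
    """Score every candidate model and return the best one by `metric`."""
    scores = {name: score_model(preds, references) for name, preds in candidates.items()}
    best = max(scores, key=lambda name: scores[name][metric])
    return best, scores
```

Which metric to rank by is itself part of the strategy: exact match suits short factual answers, while overlap metrics tolerate paraphrase.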

In response to this difficulty, Google Cloud launched the Vertex Gen AI Evaluation Service, which offers a toolset of well-tested, interpretable evaluation methods that can be tailored to your particular use case. It helps you make informed choices at every stage of the development process so that your LLM applications perform as well as possible.
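A minimal sketch of running such an evaluation from the Vertex AI Python SDK, assuming the `vertexai.evaluation` module and its `EvalTask` class available in recent `google-cloud-aiplatform` releases (older releases exposed it as `vertexai.preview.evaluation`). It scores pre-generated responses against references with computation-based metrics, so no model is called; the project, location and data are hypothetical.

```python
def evaluate_saved_responses(project: str, location: str):
    """Score pre-generated responses with computation-based metrics (hypothetical data)."""
    # Imported lazily so this file can be loaded without the SDK installed.
    import pandas as pd
    import vertexai
    from vertexai.evaluation import EvalTask

    vertexai.init(project=project, location=location)

    # "response" holds outputs you already generated; "reference" holds ground truth.
    eval_dataset = pd.DataFrame({
        "response": ["Paris is the capital of France.", "The answer is 4."],
        "reference": ["Paris is the capital of France.", "4"],
    })

    task = EvalTask(dataset=eval_dataset, metrics=["exact_match", "bleu", "rouge_l_sum"])
    result = task.evaluate()

    # Aggregate scores per metric, plus a per-row table for error analysis.
    return result.summary_metrics, result.metrics_table
```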

This blog article describes the Vertex Gen AI Evaluation Service, demonstrates how to use it, and provides an example of how Generali Italia implemented a RAG-based LLM application using the Gen AI Evaluation Service.

Using the Vertex Gen AI Evaluation Service to Assess LLMs

With a choice of interactive or asynchronous evaluation modes, the Gen AI Evaluation Service lets you assess any model using Google Cloud’s extensive collection of quality-controlled, interpretable evaluators. The following section goes into greater depth on these three dimensions:

Image credit: Google Cloud